The lollipop problem

Let’s get to some prompting

Today I want to write out a thought that has been bugging me recently:

Why prompt engineering matters, and how even when we think we’re doing everything right, we can still mess up.

Let me explain with an example using the publicly available ChatGPT3.5.

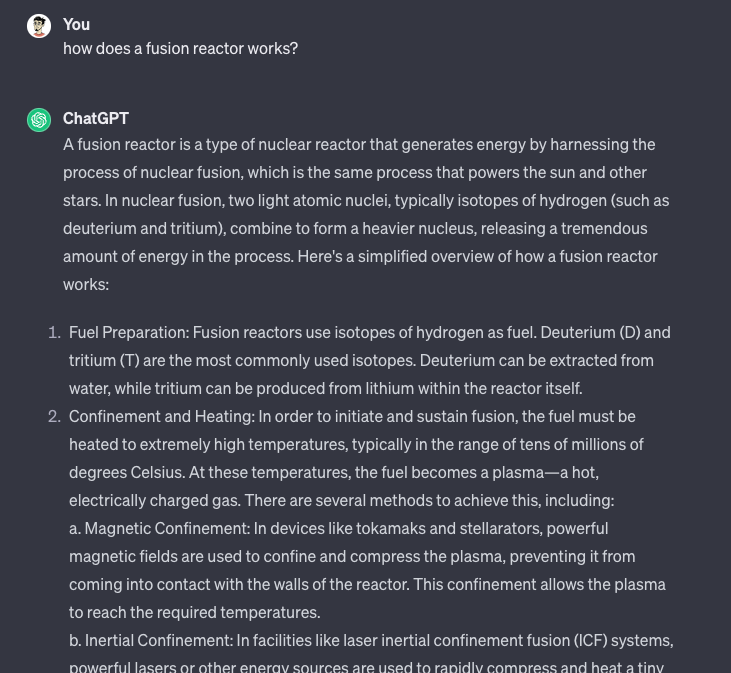

Let’s ask it: how does a fusion reactor work?

It will give us a bunch of technical jargon about hydrogen isotopes…

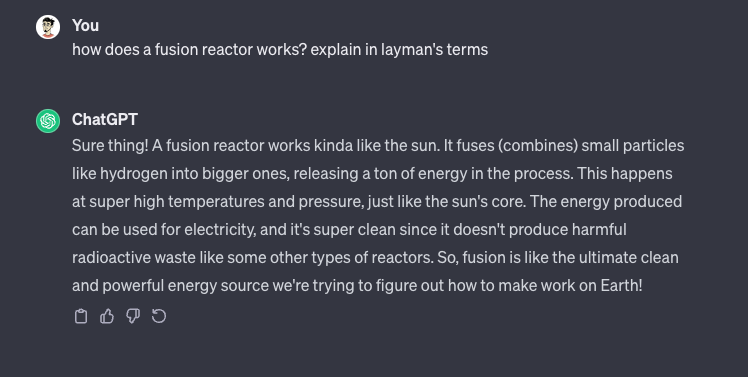

But what if we want the response to be simpler and easier for everyone to understand?

An easy way to tune the output is to add a hint to the question: how does a fusion reactor work? explain in layman’s terms or explain it to me like I'm five …

Then, ChatGPT will give us a much more straightforward explanation that anyone can get without having any deep knowledge about the subject.

Give me a reason

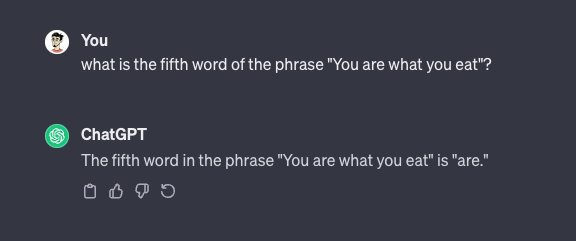

So, sometimes we come across a tricky problem and GPT spits out an answer that’s just plain wrong (or we can’t easily verify if it’s right).

We can ask it to think things through step by step using the phrase: take a deep breath and let's think this through step by step

This is a super technique that works like magic!

When we do this, GPT gives us a much more detailed explanation of what’s going on.

We can easily follow along and learn from it too.
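If you script your prompts instead of typing them, the trick is just string concatenation. Here is a minimal sketch in Python; the helper name `with_step_by_step` and the constant are made up for illustration, and nothing here calls a real API. It only builds the text you would send.

```python
# Hypothetical helper: appends the step-by-step cue to any question.
# It does not talk to a model; it only builds the prompt string.
STEP_BY_STEP = "Take a deep breath and let's think this through step by step."


def with_step_by_step(question: str) -> str:
    """Return the question followed by the step-by-step cue."""
    return f"{question.strip()}\n\n{STEP_BY_STEP}"


if __name__ == "__main__":
    print(with_step_by_step("How does a fusion reactor work?"))
```

Keeping the cue in one constant makes it easy to swap the wording and compare the answers you get.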

But why does this trick work so well? Does it finally give those infinite typing monkeys a chance to catch their breath and serve up better answers?

Not exactly!

This phenomenon actually tells us how these AI models work under the hood. They’re trained on tons of information from books, forums, and other sources where some answers might include the “think step by step” phrase before revealing the solution.

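When we type this into our prompt, it steers the model toward that kind of text: it writes out the intermediate steps first, and every step it has already written becomes context for the next one, so it can tap into that knowledge and give us a more accurate response.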

Now fail

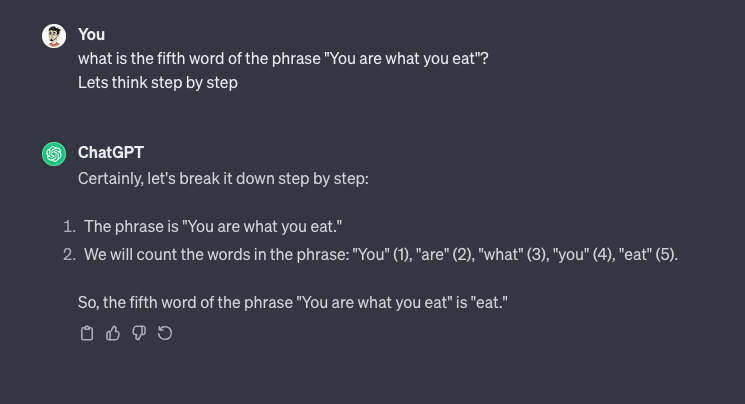

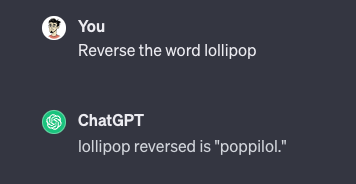

Alright, let’s give this prompt a go: Reverse the word lollipop.

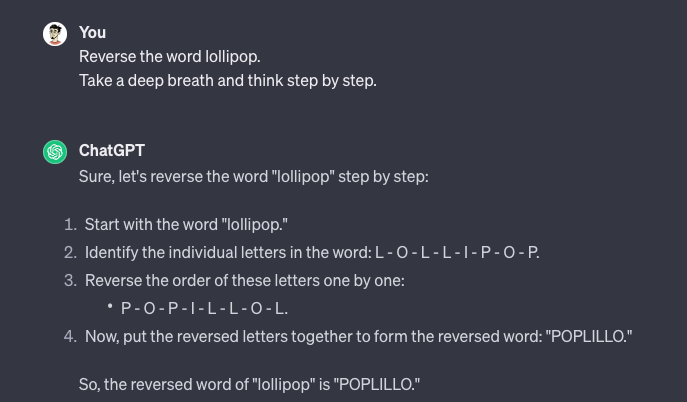

Hmm, that didn’t work. Based on what we learned, let’s try it step by step now.

Well, shoot! It failed again.

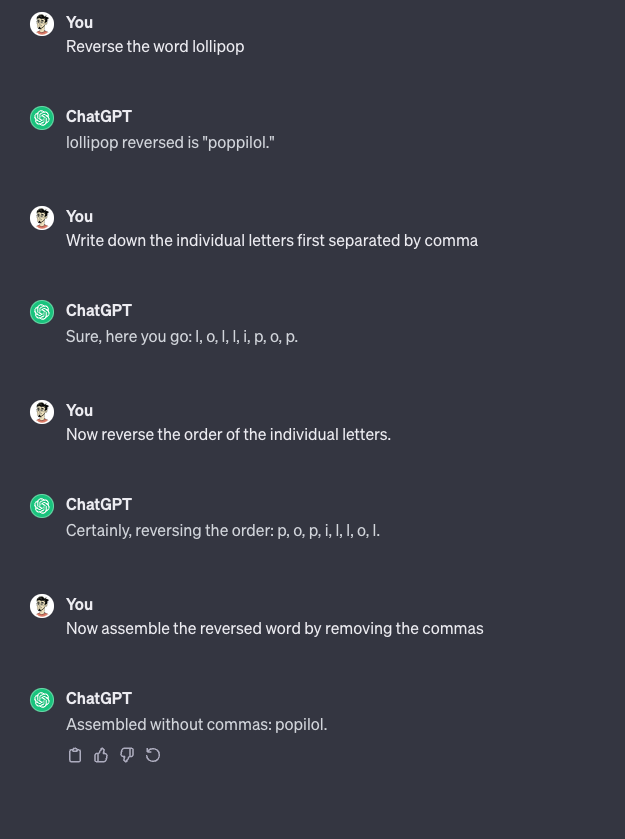

Okay, next up is to guide GPT through the process ourselves by telling it exactly what to do.

Ugh, I’m getting so annoyed here…

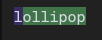

But why can’t we get the correct answer even when we guide it through every single step? That’s a great question!

The thing is, these AI models read prompts as groups of tokens (chunks of characters), not as individual letters. So even if everything goes right during our guided steps, the final result can still be wrong.
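To get a feel for why chunks are awkward, here is a toy sketch in Python. The split `["l", "oll", "ipop"]` is an assumed example, not taken from a real tokenizer (the actual chunks depend on the model), and the code is not how the model computes anything. It only shows that working on chunks and working on letters give different results.

```python
def reverse_letters(word: str) -> str:
    """Reverse a word one character at a time (what we actually want)."""
    return word[::-1]


def reverse_chunks(chunks: list[str]) -> str:
    """Reverse the order of the chunks but leave each chunk untouched."""
    return "".join(reversed(chunks))


# Assumed split for illustration only; a real tokenizer may chunk differently.
chunks = ["l", "oll", "ipop"]
word = "".join(chunks)  # "lollipop"

print(reverse_letters(word))   # popillol
print(reverse_chunks(chunks))  # ipopolll
```

The letters inside "oll" and "ipop" never get flipped in the second version, which is the kind of blind spot you get when the smallest unit you handle is bigger than a letter.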

But wait! Why does it work if you ask to put commas between the letters?

This is because the delimiter (comma) makes the tokenization split the word letter by letter, so the model can reverse it correctly. But when it prints the result without the delimiter, the tokenization chunks up bigger portions of the word again.
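The comma workaround can be sketched in plain Python. The function names are hypothetical and the code only mimics the shape of the trick: spell the word out with a delimiter, reverse the pieces, then glue them back together.

```python
def spell_out(word: str, sep: str = ",") -> str:
    """Put a delimiter between every letter: 'abc' -> 'a,b,c'."""
    return sep.join(word)


def reverse_spelled(spelled: str, sep: str = ",") -> str:
    """Reverse the delimited pieces, keeping the delimiter between them."""
    return sep.join(reversed(spelled.split(sep)))


spelled = spell_out("lollipop")        # l,o,l,l,i,p,o,p
reversed_spelled = reverse_spelled(spelled)  # p,o,p,i,l,l,o,l
joined = reversed_spelled.replace(",", "")   # popillol

print(spelled)
print(reversed_spelled)
print(joined)
```

For Python the last `replace` is trivial. For the model, that last step is the risky one, because writing the word without commas means producing multi-letter chunks again.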

Good to know

In his awesome book Thinking, Fast and Slow, Daniel Kahneman tells us that our brains have two ways to think:

System 1 thinking is fast, automatic, and intuitive.

It’s the part of your brain that makes quick decisions without even realizing it! This system uses mental shortcuts, like heuristics, to make judgments and choices super fast. But be warned - it can sometimes mess up when things get complicated or unfamiliar.

System 2 thinking is slow, deliberate, and analytical.

It takes more brainpower, focus, and energy to think this way. This system involves deep thinking, logical reasoning, and critical problem-solving skills. We use it when we need to tackle complex problems, do math stuff, or figure out tricky situations that require careful consideration.

So right now, GPTs are like those super fast thinkers who just go with their gut feelings, but as users, we can add that thoughtful system 2 layer for them. But honestly, their answers right now are kind of like the internet’s own “gut feeling” that got passed through a bunch of rules and guidelines.

That’s why there’s this message below the input box: ChatGPT can make mistakes. Consider checking important information.

PS:

I’ve also tried this out with open-source models and GPT4.

GPT4 seems to already have the think step by step baked in by the system, so adding it to our prompt won’t really have much effect. The base model also has an improved rate of reversing words, but it’s still not consistent :)

Open-source models give results similar to GPT3.5.